The scientific renaissance of the 20th century saw the rise of quantum mechanics and information theory. In 1982, physicist Richard Feynman famously argued that it was not feasible to model quantum phenomena using classical computation, and that an alternative kind of machine was required to simulate them accurately. Feynman’s argument didn’t garner much attention at the time, but 12 years later mathematician Peter Shor developed a quantum algorithm that factors large integers far faster than any known classical method, turning quantum computing into a serious possibility.

Quantum and classical computing both manipulate data to solve problems, but three fundamental principles distinguish quantum computing: superposition, entanglement, and interference. While a classical bit is always either 0 or 1, a quantum bit (qubit) can exist in a weighted combination of both states at once, and only yields a definite 0 or 1 when it is measured. This property of a quantum object is known as superposition. Two quantum entities are said to be entangled when neither can be described without referencing the other, as they lose their individual identities. The phenomenon of interference, which occurs when the amplitudes of quantum states combine to reinforce or cancel one another, is what quantum algorithms use to suppress wrong answers and raise the probability of measuring the correct output.
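The three principles can be illustrated with a few lines of plain Python. This is only a minimal sketch, not how a real quantum computer or simulator is programmed: it assumes qubit states are stored as lists of real amplitudes, and the helper names (`apply`, `probabilities`, `is_product_state`) are made up for this illustration.

```python
import math

S = 1 / math.sqrt(2)

# A single-qubit state is a list of two amplitudes: [amplitude of 0, amplitude of 1].
ZERO = [1.0, 0.0]

# The Hadamard gate turns a definite 0 into an equal superposition of 0 and 1.
HADAMARD = [[S, S], [S, -S]]


def apply(gate, state):
    """Multiply a gate matrix by a state vector."""
    return [sum(g * a for g, a in zip(row, state)) for row in gate]


def probabilities(state):
    """Measurement probability of each outcome: the squared amplitude magnitude."""
    return [abs(a) ** 2 for a in state]


def is_product_state(state, tol=1e-12):
    """A two-qubit state [a, b, c, d] (outcomes 00, 01, 10, 11) can be split
    into two independent qubits only if a*d - b*c is zero."""
    a, b, c, d = state
    return abs(a * d - b * c) < tol


# Superposition: after one Hadamard, 0 and 1 are equally likely.
plus = apply(HADAMARD, ZERO)
p_super = probabilities(plus)

# Interference: a second Hadamard makes the two paths to 1 cancel
# and the two paths to 0 reinforce, so the qubit returns to 0.
p_interf = probabilities(apply(HADAMARD, plus))

# Entanglement: the Bell state gives 00 or 11, never 01 or 10,
# and cannot be written as two separate single-qubit states.
bell = [S, 0.0, 0.0, S]
independent = [S, 0.0, S, 0.0]  # first qubit in superposition, second in 0

print(p_super, p_interf)
print(is_product_state(bell), is_product_state(independent))
```

The second Hadamard is the simplest case of the cancellation that larger quantum algorithms rely on to make wrong answers unlikely.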

Because a register of n entangled qubits is described by 2^n amplitudes, quantum computers can encode information across a very large number of states simultaneously. Quantum computers show huge promise in terms of industrial and commercial applications in fields of chemical and biological engineering, drug discovery, cybersecurity, artificial intelligence, machine learning, complex manufacturing, and financial services. From simulating complex chemical reactions to aeronautical navigation, much of this potential remains unexplored.
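To get a feel for how quickly the number of states grows, the rough arithmetic below estimates the memory a classical machine would need just to store every amplitude of an n-qubit state. It is a back-of-the-envelope sketch: the function name `state_vector_bytes` is invented here, and the 16 bytes per amplitude assumes one double-precision complex number each. Practical simulators use more economical techniques, so this is an upper-bound intuition rather than a hard limit.

```python
def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**num_qubits amplitudes naively.

    Assumes each amplitude is one double-precision complex number (16 bytes).
    """
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Each extra qubit doubles the total, which is why a few dozen qubits are already out of reach for this naive approach.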

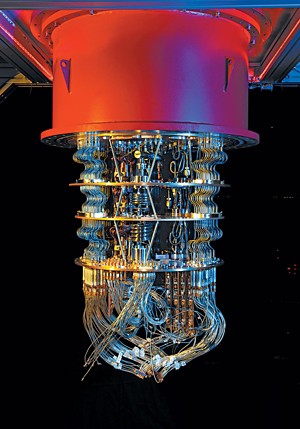

Despite their huge potential, scientists building quantum computers tend to run into two major hurdles. First, qubits need to be isolated from the surrounding environment, which for many designs means operating at temperatures close to absolute zero. The better the isolation, the longer a qubit retains its quantum state, a span known as its ‘coherence time’. Second, qubits need to be entangled with one another and controllable on demand for algorithm execution, which requires them to interact. Finding the right balance between isolation and interaction is therefore difficult.

With the ability to revolutionise computation at stake, multiple corporate players are competing to attain quantum advantage. Leading the race to develop the most refined quantum computer are IBM, Google Quantum AI, and Microsoft.

In October 2019, Google announced that it had attained quantum supremacy, using its fully programmable 54-qubit processor Sycamore to solve a sampling problem in 200 seconds. Google surpassed this result in 2023 using an updated version of Sycamore with 70 qubits.

In December 2023, IBM unveiled Condor, a new chip with 1,121 superconducting qubits, surpassing the 433-qubit capacity of its Osprey processor released in November 2022. Turning its focus towards the development of modular quantum processors with low error rates, the company also announced the Heron processor with 133 qubits and a record-low error rate.

Despite the excitement surrounding the possible applications of quantum computers, it’s not time to celebrate just yet. Shor’s algorithm has yet to be run at a scale that would outperform classical computers on practically relevant problems, and we are still a considerable distance away from having a system sophisticated enough for commercial use. However, the rapid innovation and research within this field, along with the long-term advantage and potential of quantum computing, hint at a new and prosperous computing age.